WaveformMAE: Self-Supervised Learning for Fault-Inversion-Free Reconstruction of Ground Motion Waveforms Across the Entire Area

Abstract

We propose a self-supervised learning approach based on the Masked Autoencoder (MAE) to reconstruct ground motion waveforms throughout an area without relying on fault inversion. Preprocessing transforms three-channel waveforms into one-dimensional word vectors for Transformer encoding. During training, a large portion (75%-99%) of station information is randomly masked, and the MAE learns to reconstruct seismic waveforms within a specific spatial range. At inference, longer waveforms are divided into segments and the overlapping predictions are combined by weighted averaging. The training dataset is constructed manually from publicly available source model datasets and pre-calculated Green's functions. This approach shows promising potential for predicting seismic waveforms across the entire spatial domain, enhancing our understanding of seismic events and improving earthquake risk mitigation strategies.

Earthquakes, especially large ones, pose severe hazards to human life and property. Understanding seismic waveforms is crucial for effective earthquake-resistant structural design. However, traditional methods for obtaining seismic waveforms at various locations require two steps: inversion of the fault conditions, followed by forward modeling.

In this study, we propose an approach that uses self-supervised learning with the Masked Autoencoder (MAE) as the backbone, drawing inspiration from the field of image reconstruction. With this method, ground motion waveforms across the entire area can be reconstructed in a single step. Seismic data are preprocessed by transforming three-channel waveforms with 256 samples (approximately 128 seconds of data at a sampling frequency of 2 Hz) into one-dimensional word vectors, which allows us to apply Transformer encoding.
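The preprocessing step can be illustrated with a minimal sketch. It assumes that each station record is a (3, 256) array and that the "word vector" is obtained by simply concatenating the three channels; the actual tokenization used in this work may differ, and the function name `waveforms_to_tokens` is hypothetical.

```python
import numpy as np

def waveforms_to_tokens(waveforms):
    """Flatten per-station records of shape (n_stations, 3, 256)
    into one 1-D vector per station, shape (n_stations, 768).

    Assumption: channels are concatenated end to end; the original
    preprocessing is not specified at this level of detail.
    """
    waveforms = np.asarray(waveforms, dtype=np.float32)
    n_stations, n_channels, n_samples = waveforms.shape
    return waveforms.reshape(n_stations, n_channels * n_samples)

# Synthetic stand-in for 10 stations, 3 channels, 256 samples at 2 Hz.
rng = np.random.default_rng(0)
records = rng.standard_normal((10, 3, 256)).astype(np.float32)
tokens = waveforms_to_tokens(records)

# Window length in seconds: 256 samples / 2 Hz = 128 s.
window_seconds = records.shape[-1] / 2.0
```

Each row of `tokens` can then be treated as one input token, in the same way an image patch is treated in an image MAE.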

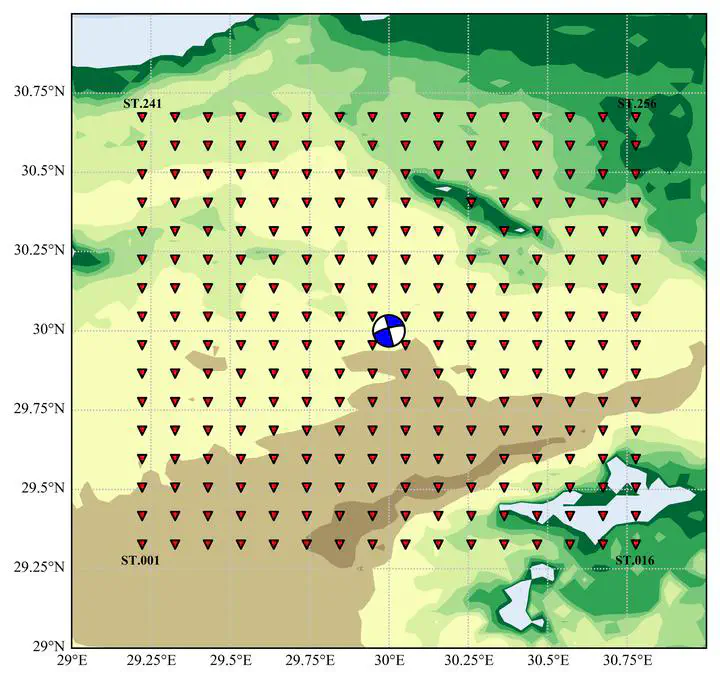

To construct the training dataset, we manually calculate the spatial distribution of ground motion waveforms from publicly available source model datasets of hundreds of earthquakes, convolved with pre-calculated Green's functions for a specific area.
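As a rough illustration of this forward calculation, the sketch below sums, over subfaults, the discrete convolution of a source time function with a three-component Green's function. This is a simplified assumption about the procedure: the array layouts, the function name `synthesize_waveform`, and the absence of rupture-time delays and moment scaling are all hypothetical choices made for brevity.

```python
import numpy as np

def synthesize_waveform(source_time_functions, greens_functions,
                        n_samples=256, dt=0.5):
    """Synthesize a 3-component waveform at one station.

    source_time_functions: (n_subfaults, n_src) slip-rate histories
    greens_functions:      (n_subfaults, 3, n_gf) station responses
    dt: sampling interval in seconds (0.5 s corresponds to 2 Hz)
    """
    out = np.zeros((3, n_samples))
    for stf, gf in zip(source_time_functions, greens_functions):
        for c in range(3):
            # Discrete approximation of the convolution integral.
            full = np.convolve(stf, gf[c]) * dt
            n = min(full.size, n_samples)
            out[c, :n] += full[:n]
    return out

# Sanity check with a unit-area impulse source: the output
# should reproduce the Green's function itself.
rng = np.random.default_rng(1)
gf = rng.standard_normal((1, 3, 256))
impulse = np.array([[1.0 / 0.5, 0.0, 0.0]])
synthetic = synthesize_waveform(impulse, gf)
```

Repeating this for every station location in the area would yield the spatial waveform distribution used as training data.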

During the training phase, we randomly mask a significant portion (75%-90%) of the station information. The masked data is then passed through an encoder and decoder within the MAE framework, enabling us to reconstruct the seismic waveforms within a specific spatial range. For inference, when reconstructing longer seismic waveforms, we can divide them into segments and perform weighted averaging on overlapping sections to enhance the accuracy of the predictions.
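The two procedures in this paragraph, random station masking and blending of overlapping segment predictions, can be sketched as follows. The helper names, the Hann-shaped weights, and the regular hop between segments are assumptions for illustration; the text only states that overlapping sections are combined by weighted averaging.

```python
import numpy as np

def random_station_mask(n_stations, mask_ratio, rng):
    """Return a boolean array where True marks a masked station."""
    n_masked = int(round(n_stations * mask_ratio))
    # Keep at least one station visible to the encoder.
    n_masked = min(max(n_masked, 0), n_stations - 1)
    mask = np.zeros(n_stations, dtype=bool)
    mask[rng.permutation(n_stations)[:n_masked]] = True
    return mask

def blend_segments(segments, hop):
    """Weighted average of overlapping predictions.

    segments: (n_segments, seg_len), segment k starts at sample k * hop.
    Assumption: a strictly positive Hann-shaped taper as the weight.
    """
    n_segments, seg_len = segments.shape
    if hop > seg_len:
        raise ValueError("hop must not exceed the segment length")
    total = hop * (n_segments - 1) + seg_len
    weights = np.hanning(seg_len + 2)[1:-1]  # drop the zero endpoints
    acc = np.zeros(total)
    wsum = np.zeros(total)
    for k, seg in enumerate(segments):
        start = k * hop
        acc[start:start + seg_len] += weights * seg
        wsum[start:start + seg_len] += weights
    return acc / wsum

rng = np.random.default_rng(2)
mask = random_station_mask(100, 0.75, rng)

# A 512-sample trace split into 256-sample windows with 50% overlap.
trace = rng.standard_normal(512)
windows = np.stack([trace[s:s + 256] for s in range(0, 257, 128)])
blended = blend_segments(windows, hop=128)
```

In this toy case the windows are cut from the true trace, so blending recovers it exactly; with model predictions, the taper down-weights segment edges, where predictions from adjacent windows overlap.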

This approach shows promising potential for predicting seismic waveforms across the entire spatial domain without the need for traditional fault inversion procedures. By leveraging self-supervised learning and the MAE framework, we can overcome the limitations imposed by sparse station coverage, ultimately enhancing our understanding of seismic events and improving earthquake risk mitigation strategies.